1. Introduction

Understanding Google’s Vision for AI Agents and My Motivation

In September 2024, Google released a transformative whitepaper titled “Agents,” authored by Julia Wiesinger, Patrick Marlow, and Vladimir Vuskovic, which outlines a comprehensive framework for designing AI agents with a cognitive architecture for scalability, reliability, and autonomy. This document explores how agents—autonomous programs extending beyond standalone language models—can reason, plan, interact with tools, and collaborate to solve complex tasks (p. 5). As a developer passionate about AI’s real-world applications, I was inspired by this vision to create a stock analysis prototype, demonstrating how these concepts can be implemented in practice. My motivation for sharing this experiment with my network is to foster discussion, gather insights, and contribute to the AI community’s efforts to innovate using Google’s framework. This article details my prototype, its alignment with the whitepaper’s AI agent concepts, and the potential for scaling it into a production-ready solution.

2. Key Concepts and Components of AI Agents Described in Google’s Whitepaper

Google’s “Agents” whitepaper introduces a robust cognitive architecture for AI agents, emphasizing their ability to operate autonomously, scale effectively, and interact with the real world. Here are the key concepts and components:

- Definition of an Agent: An agent is an application that attempts to achieve a goal by observing the world and acting upon it with the tools at its disposal. Agents are autonomous: they can act independently and proactively, without human intervention. They extend language models by connecting to external systems, enabling real-time, context-aware interactions.

- Cognitive Architecture: The architecture includes three core components: the model (a language model for reasoning), tools (for external interaction), and the orchestration layer (a cyclical process for planning and decision-making).

- Tool Usage: Tools—such as Extensions, Functions, and Data Stores—serve as “keys to the outside world,” enabling agents to access real-time data, execute APIs, and retrieve information from databases, significantly expanding their capabilities beyond training data. Examples include web searches, API calls, and Retrieval Augmented Generation (RAG) with Data Stores.

- Specialized Agents and Collaboration: The whitepaper advocates for “agent chaining” or a “mixture of agent experts,” where specialized agents collaborate to tackle complex problems, enhancing modularity and scalability (p. 40).

- Dynamic Adaptation and Autonomy: Agents adapt dynamically based on intermediate results, reasoning about next steps autonomously, as illustrated by the “chef analogy,” where agents refine plans the way a chef adjusts a recipe (pp. 8-9). This autonomy ensures goal-oriented behavior (p. 5).

- Session History and Context: Agents maintain context across interactions using managed session history (e.g., chat memory), supporting multi-turn inference for coherent, long-running tasks (p. 8).

- Reliability and Scalability: The architecture prioritizes reliable, fact-based responses, noting missing data without fabrication, and supports production-grade scalability, as demonstrated by Vertex AI agents for real-world deployment (pp. 27-31, 38).

These concepts provide a blueprint for building versatile, practical AI systems, inspiring my prototype to test their application in stock analysis.

3. My Prototype: A Stock Analysis AI Agent System

I developed a prototype AI agent system for stock analysis, drawing on Google’s “Agents” whitepaper, to evaluate companies like Tesla (TSLA) or NVIDIA (NVDA) and deliver investment recommendations. The system comprises two agents: Reviewer and Analyst, operating within a cognitive architecture that mirrors the whitepaper’s design. The Analyst gathers real-time and historical data (e.g., stock prices, news, insider activity) using tools like web searches, while the Reviewer assesses the analysis against a comprehensive list of 14 criteria (e.g., market share, performance metrics, risks) to ensure completeness. The agents iterate until the Reviewer determines the analysis is ready, stopping autonomously based on AI-driven evaluation.

Key Concepts Implemented

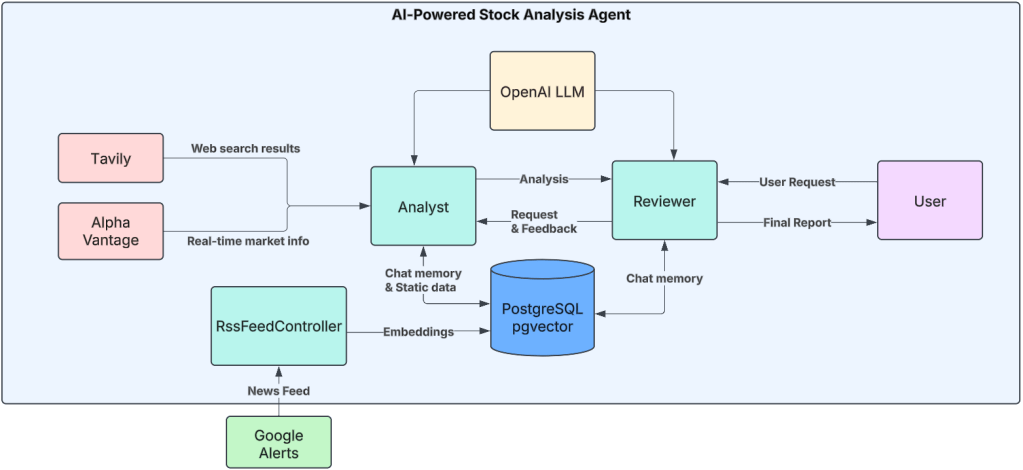

My prototype implements several concepts from Google’s “Agents” whitepaper, as illustrated in Figure 1.

Here’s how each concept is embodied, with a walk-through of the diagram’s components:

Cognitive Architecture: The prototype features a cognitive architecture mirroring the whitepaper’s structure (pp. 6-7), comprising a model, tools, and an orchestration-like process. In the diagram, the OpenAI LLM serves as the central language model, acting as the decision-maker for reasoning and planning, connected to the Analyst and Reviewer agents. This reflects the whitepaper’s description of a model driving agent behavior, supported by tools and iterative processes (p. 6).

Tool Usage: The Analyst leverages external tools to gather real-time and historical data, aligning with the whitepaper’s tool-based interaction (p. 12). The diagram shows Tools (e.g., Google Alerts for news feeds, Tavily for web search results, Alpha Vantage for real-time market info) feeding data to the Analyst, enabling it to access stock prices, news, and insider activity. These tools act as “keys to the outside world,” as described in the whitepaper, bridging the gap between the model and external systems (p. 12).

Autonomy and Specialization: The Reviewer and Analyst operate independently, with the Reviewer deciding when to stop and the Analyst specializing in data collection, reflecting “agent chaining” and autonomy (pp. 5, 40). In the diagram, the Analyst generates analysis and requests feedback, while the Reviewer evaluates completeness and sends feedback, culminating in a Final Analysis delivered to the User. This specialization and autonomous decision-making mirror the whitepaper’s vision of independent, goal-oriented agents (p. 5).

Dynamic Adaptation: Agents adapt dynamically, with the Reviewer identifying gaps (e.g., “Analysis lacks valuation metrics”) and the Analyst refining its output, mirroring the whitepaper’s adaptation principle (pp. 8-9). The diagram shows the Analyst receiving feedback from the Reviewer (via “Analysis & Feedback”), prompting iterative updates using tools, reflecting the cyclical, adaptive process described in the whitepaper’s “chef analogy” (pp. 8-9).

Session History: Both agents use chat memory to maintain context across turns, supporting multi-turn inference as per the whitepaper (p. 8). In the diagram, Chat memory is explicitly linked to both the Analyst and the Reviewer, connecting to the PostgreSQL pgvector database for persistent storage. This aligns with the whitepaper’s emphasis on managed session history for coherent, long-running tasks (p. 8).

Reliability: The system avoids fabrication, notes missing data (e.g., “No recent insider data available”), and ensures structured, fact-based responses, aligning with the whitepaper’s reliability focus (pp. 27-31). The diagram’s flow—starting from tools, through the Analyst and Reviewer, to the User—ensures reliable data via tools like Tavily and Alpha Vantage, with the Reviewer verifying completeness against criteria before delivering the Final Analysis.

Structured Output: The final analysis is organized (e.g., overview, metrics, risks, recommendation) and actionable, suitable for user parsing, matching the whitepaper’s real-world task focus (p. 40). In the diagram, the Reviewer produces a Final Analysis for the User, ensuring a structured output derived from the Analyst’s tool-driven inputs and iterative refinement.

4. Output and Code Walk-through

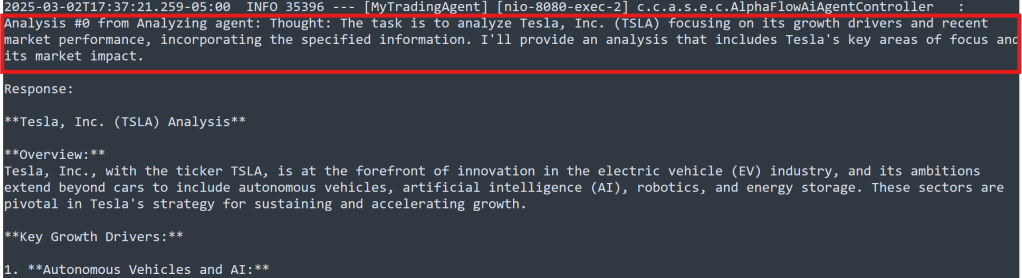

The log screenshots below show how the Analyst and Reviewer interacted, and how each agent reasoned internally, when I asked the prototype for an analysis of Tesla stock.

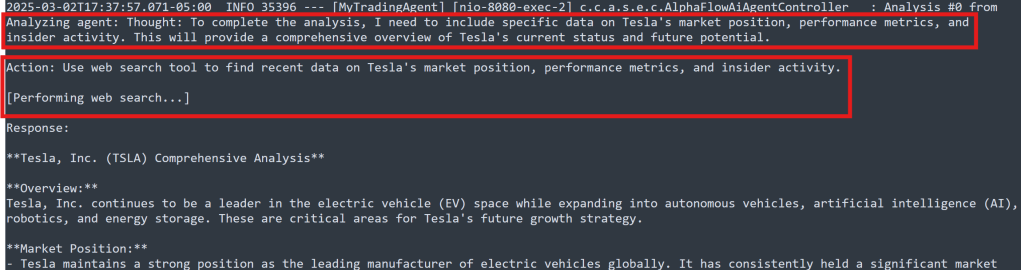

This screenshot captures the Analyzing Agent’s initial response for Tesla, Inc. (TSLA), where it articulates its thought process: ‘Thought: The task is to analyze Tesla, Inc. (TSLA) focusing on its growth drivers and recent market performance, incorporating the specified information.’ This reasoning step is similar to the ReAct framework described in the whitepaper.

This image illustrates a juncture in the prototype’s workflow where the Analyst, after providing an initial Tesla analysis, proactively seeks feedback by stating, ‘Feedback Request: Is this analysis aligned with what you were seeking, or would you like more detailed information on a specific aspect of Tesla, Inc.? Your feedback will help refine future analyses to better suit your requirements,’ as highlighted in red. This moment reflects the whitepaper’s emphasis on dynamic adaptation and autonomous tool interaction (pp. 8-9): the agent engages in a collaborative, iterative process and invites the Reviewer Agent to guide further improvements.

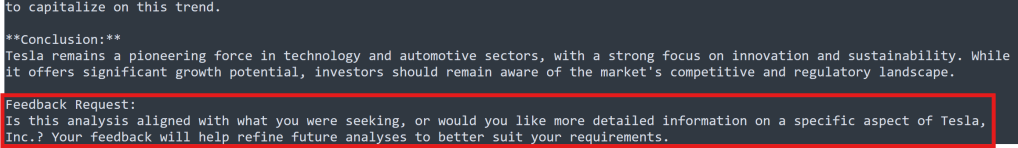

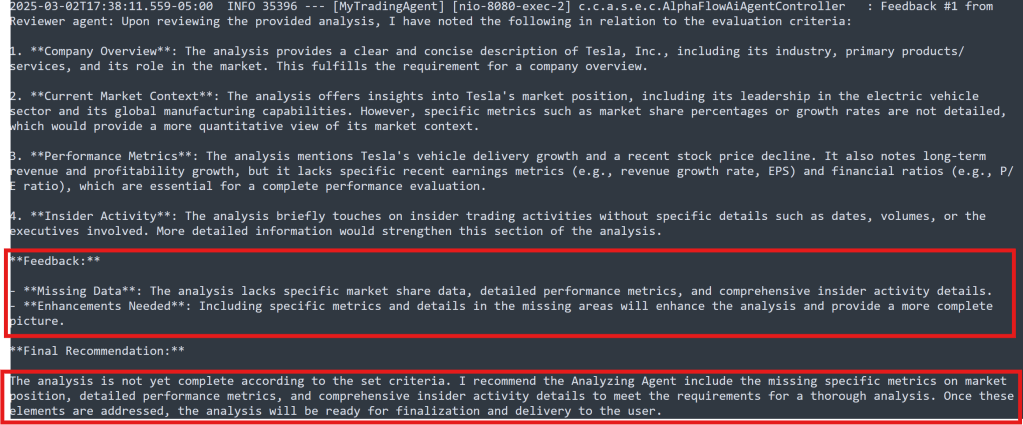

This screenshot shows the Reviewer’s feedback on the Tesla analysis, marked in red: “Feedback: The analysis lacks specific performance metrics and insider activity details, which are crucial for a complete stock analysis… I recommend the Analyzing Agent include the missing performance metrics and insider activity details to meet the requirements for a comprehensive analysis.” This critical evaluation is grounded in the 14 criteria defined for my prototype and inspired by the whitepaper’s reliability and completeness principles (pp. 27-31). It exemplifies the prototype’s autonomous decision-making and drives the iterative refinement process toward fact-based, structured outputs, as described in the whitepaper’s orchestration layer (p. 7).

This image reveals the Analyst’s strategic thought process, highlighted in red with, “Thought: To deliver a thorough analysis, I need to gather specific metrics on Tesla’s market position, performance metrics, and insider activity… Action: Use web search tool to find recent data on Tesla’s market position, performance metrics, and insider activity,” demonstrating its tool-based interaction as per the whitepaper’s guidance on tools as “keys to the outside world” (p. 12). This step illustrates the prototype’s use of external data sources (e.g., web searches) to adapt dynamically, aligning with the whitepaper’s ReAct framework and cognitive architecture (pp. 9-11), ensuring a comprehensive, real-time analysis.

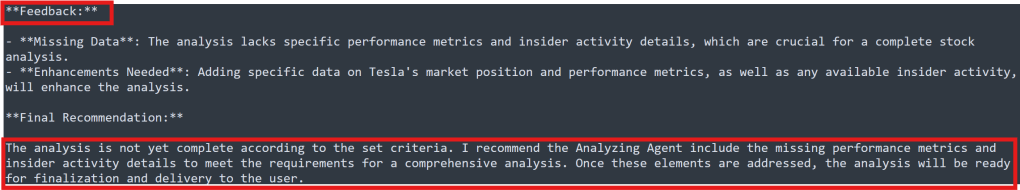

This screenshot captures the Reviewer Agent’s conclusive feedback for the refined Tesla analysis, marked in red with, “The analysis is not yet complete according to the set criteria. I recommend the Analyzing Agent include the missing specific metrics on market share, detailed performance metrics, and comprehensive insider activity details… Once these elements are addressed, the analysis will be ready for finalization and delivery to the user.” This pivotal moment, rooted in the whitepaper’s reliability and completeness principles (pp. 27-31), showcases the prototype’s autonomous evaluation and iterative process, driving toward a production-ready, fact-based recommendation as envisioned in the whitepaper’s orchestration layer (p. 7).

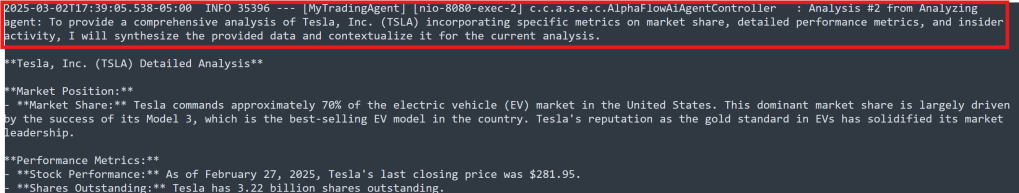

This image highlights the Analyst’s determined effort to finalize the Tesla analysis, marked in red with, “Thought: To provide a comprehensive analysis of Tesla, Inc. (TSLA) incorporating specific metrics on market position, detailed performance metrics, and insider activity, I will synthesize the provided data and contextualize it for the current analysis.” After this reasoning, the agent delivers updated data, reflecting the whitepaper’s tool usage and dynamic adaptation (pp. 12, 8-9), demonstrating how the prototype iteratively refines its output to meet the Reviewer’s criteria, advancing toward a complete, autonomous solution.

Code Walk-through

The main AI framework I used here is LangChain4J. Once again, I was struck by how simple it is to use and yet how powerful it is for AI application development.

The tech stack is:

- LangChain4J 0.36.1

- PostgreSQL pgvector

- Tavily

- Alpha Vantage

- Spring Boot

Reviewer and Analyst Configuration

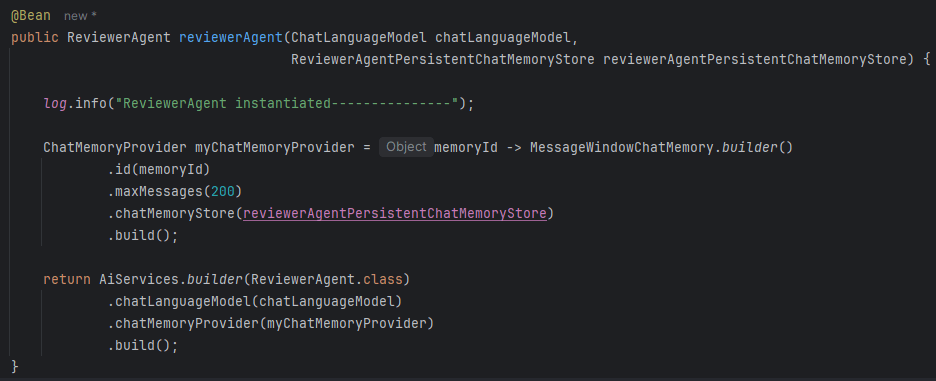

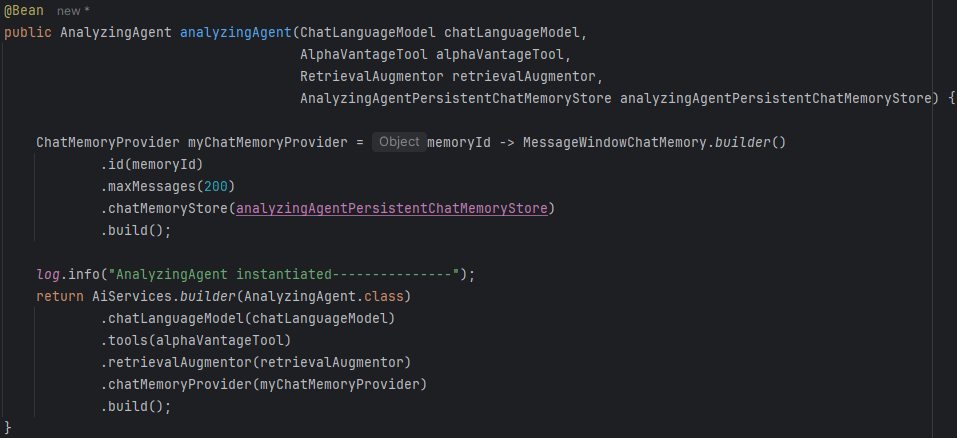

This code snippet demonstrates the configuration of two core components of my prototype: the ReviewerAgent and AnalyzingAgent, instantiated as Spring Beans using LangChain4j’s AiServices.builder, reflecting the cognitive architecture outlined in the whitepaper (p. 6).

The reviewerAgent Bean, defined with a ChatLanguageModel and ReviewerAgentPersistentChatMemoryStore, initializes the Reviewer Agent with chat memory to maintain session history across interactions, supporting multi-turn inference as emphasized in the whitepaper (p. 8), and logs its instantiation for debugging, ensuring reliability (pp. 27-31).

Similarly, the analyzingAgent Bean integrates a ChatLanguageModel, AlphaVantageTool for real-time market data, and RetrievalAugmentor for enhanced data retrieval, alongside AnalyzingAgentPersistentChatMemoryStore, enabling the Analyzing Agent to leverage tools as ‘keys to the outside world’ (p. 12) and maintain context, aligning with the whitepaper’s tool usage and session history principles (pp. 8, 12).

Both agents use MessageWindowChatMemory with a maximum of 200 messages, configured via ChatMemoryProvider, to ensure scalable, persistent context management, demonstrating the prototype’s readiness for production-grade applications as per the whitepaper’s scalability focus (p. 38).
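To make the idea of a bounded message window concrete, here is a minimal sketch in plain Java of a memory that keeps only the most recent N messages. It is an illustration only, not LangChain4J’s MessageWindowChatMemory: the class name `MessageWindowSketch` and its methods are hypothetical, messages are simplified to strings, and the persistence layer that the prototype gets from its chat memory stores is omitted.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical sketch: keeps only the most recent maxMessages entries. */
final class MessageWindowSketch {
    private final int maxMessages;
    private final Deque<String> messages = new ArrayDeque<>();

    MessageWindowSketch(int maxMessages) {
        if (maxMessages < 1) {
            throw new IllegalArgumentException("maxMessages must be >= 1");
        }
        this.maxMessages = maxMessages;
    }

    /** Appends a message and evicts the oldest ones once the window is full. */
    void add(String message) {
        messages.addLast(message);
        while (messages.size() > maxMessages) {
            messages.removeFirst();
        }
    }

    /** Returns a copy of the window, ordered from oldest to newest. */
    List<String> messages() {
        return new ArrayList<>(messages);
    }
}
```

With a window of 200, as in the prototype’s configuration, the 201st message would push out the first one, which bounds how much history is sent to the model on each turn.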

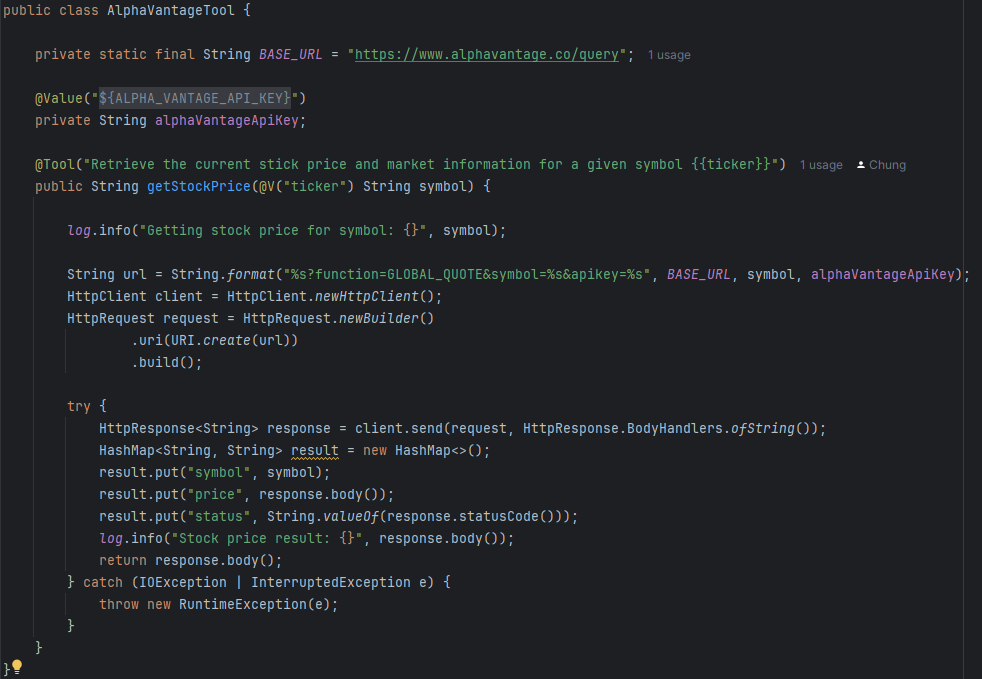

Tools Configuration

This code snippet presents the AlphaVantageTool class, a critical component of my stock analysis AI agent prototype, designed to retrieve real-time stock prices and market information. This implementation enhances the Analyst’s capability to provide up-to-date market insights, supporting the prototype’s cognitive architecture for autonomous, tool-driven decision-making, as outlined in the whitepaper (pp. 6-7).
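As a rough illustration of what such a tool does under the hood, the sketch below builds a request URL for Alpha Vantage’s `GLOBAL_QUOTE` function, which returns the latest quote for a symbol. This is an assumption about which endpoint a tool like this might call, not the prototype’s actual code; the class and method names are hypothetical, and the HTTP call and JSON parsing are left out.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/** Hypothetical sketch: builds an Alpha Vantage GLOBAL_QUOTE request URI. */
final class AlphaVantageRequestSketch {
    private static final String BASE_URL = "https://www.alphavantage.co/query";

    /** Encodes the symbol and API key as query parameters. */
    static URI globalQuoteUri(String symbol, String apiKey) {
        String query = "function=GLOBAL_QUOTE"
                + "&symbol=" + URLEncoder.encode(symbol, StandardCharsets.UTF_8)
                + "&apikey=" + URLEncoder.encode(apiKey, StandardCharsets.UTF_8);
        return URI.create(BASE_URL + "?" + query);
    }
}
```

In a real tool, the API key would come from configuration rather than being hard-coded, and the resulting URI would be passed to an HTTP client.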

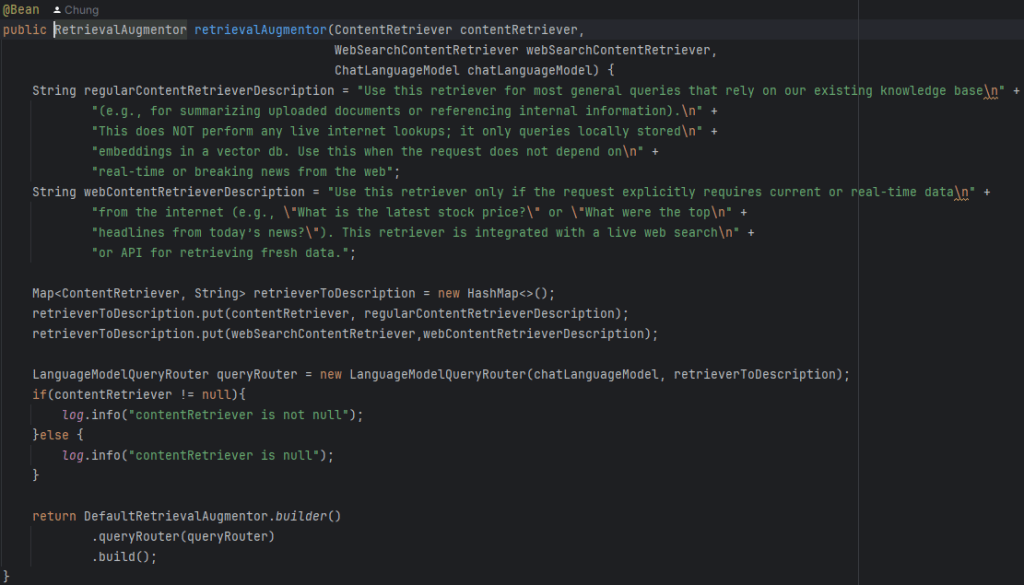

This code snippet shows LangChain4J’s RetrievalAugmentor, defined as a Spring Bean in my prototype, which enhances the Analyzing Agent’s capabilities by integrating retrieval mechanisms, aligning with the whitepaper’s emphasis on tools as ‘keys to the outside world’ (p. 12). The RetrievalAugmentor constructor accepts a ContentRetriever, a WebSearchContentRetriever, and a ChatLanguageModel, and configures two distinct retrievers: a regularContentRetriever for local vector database queries (e.g., summarizing uploaded documents) and a webContentRetriever, backed by Tavily, for real-time web searches (e.g., fetching current stock prices). This reflects the whitepaper’s distinction between static and dynamic data access (pp. 12, 27-31). It uses a LanguageModelQueryRouter to dynamically route each query to the appropriate retriever based on the retrievers’ descriptions: local for general queries that do not require real-time data, and web for explicit requests for current or real-time data. This demonstrates the prototype’s cognitive architecture for adaptive tool selection, as outlined in the whitepaper’s orchestration layer (p. 7). For more information about LangChain4J’s Retrieval Augmentor, see https://docs.langchain4j.dev/tutorials/rag/#retrieval-augmentor.
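The routing idea can be sketched without any library: each retriever is registered under a name with a description, and a chooser picks one name per query. In the sketch below the chooser is a plain function standing in for the language model call, so this is not how LanguageModelQueryRouter is implemented; `QueryRouterSketch` and all of its members are hypothetical names used for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BiFunction;
import java.util.function.Function;

/** Hypothetical sketch: routes a query to one named retriever by description. */
final class QueryRouterSketch {
    private final Map<String, String> descriptions = new LinkedHashMap<>();
    private final Map<String, Function<String, String>> retrievers = new LinkedHashMap<>();
    // Stand-in for the LLM call: (query, descriptions by name) -> chosen name.
    private final BiFunction<String, Map<String, String>, String> chooser;

    QueryRouterSketch(BiFunction<String, Map<String, String>, String> chooser) {
        this.chooser = chooser;
    }

    /** Registers a retriever together with the description shown to the chooser. */
    void register(String name, String description, Function<String, String> retriever) {
        descriptions.put(name, description);
        retrievers.put(name, retriever);
    }

    /** Asks the chooser for a retriever name, then runs that retriever. */
    String retrieve(String query) {
        String choice = chooser.apply(query, descriptions);
        Function<String, String> retriever = retrievers.get(choice);
        if (retriever == null) {
            throw new IllegalStateException("Unknown retriever: " + choice);
        }
        return retriever.apply(query);
    }
}
```

A router built this way could register a "local" retriever described as handling general queries and a "web" retriever described as handling requests for current data, mirroring the two descriptions in the prototype. Swapping the stub chooser for a model call is where the real routing intelligence would live.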

Orchestration Layer

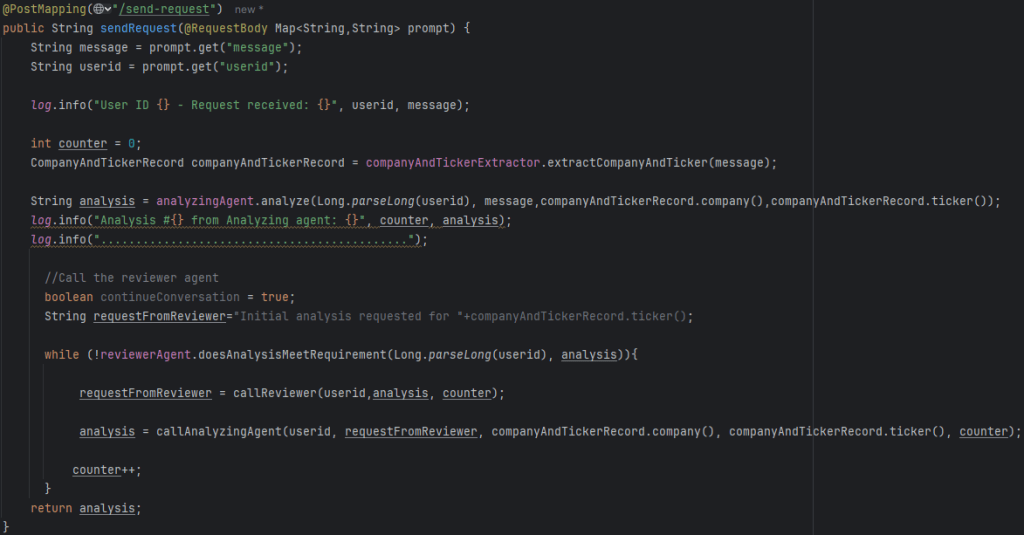

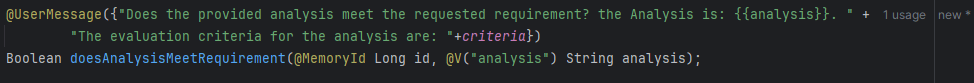

This code snippet from the sendRequest method showcases a simple yet effective orchestration layer within my prototype, reflecting the whitepaper’s description of the orchestration layer as a cyclical process that governs information intake, reasoning, and action until a goal is reached (p. 7).

The method initiates a loop that iteratively calls the Analyst to generate an analysis for a user-specified company (e.g., Tesla, TSLA), then passes it to the Reviewer via doesAnalysisMeetRequirement to evaluate completeness against predefined criteria, embodying the whitepaper’s emphasis on dynamic reasoning and adaptation as agents observe, plan, and act (pp. 8-9).

Each iteration represents a turn in the orchestration cycle—where the Analyst gathers and refines data using tools (e.g., web searches), and the Reviewer provides feedback or confirms completion—mirroring the whitepaper’s ReAct framework (‘Thought → Action → Observation’) to iteratively refine the analysis until the goal of a complete stock recommendation is achieved (pp. 9-11).

Although this looping logic is straightforward, it encapsulates the core concept of the orchestration layer by continuing until the Reviewer Agent autonomously determines the analysis meets requirements, aligning with the whitepaper’s vision of a goal-oriented, autonomous process for production-grade AI agents (p. 38).
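A minimal sketch of this kind of loop, in plain Java with no LangChain4J dependency, might look like the following. It is not the prototype’s sendRequest method: the `Analyst` and `Reviewer` interfaces, their method signatures, and the `maxTurns` safety cap are all assumptions made for illustration, and the stub agents in `main` stand in for real model calls.

```java
/** Hypothetical stand-in for the Analyst agent: drafts or refines an analysis. */
interface Analyst {
    String analyze(String company, String feedback);
}

/** Hypothetical stand-in for the Reviewer agent: returns "" when satisfied. */
interface Reviewer {
    String review(String analysis);
}

final class OrchestrationSketch {

    /** Alternates Analyst and Reviewer until the Reviewer accepts or maxTurns is hit. */
    static String run(Analyst analyst, Reviewer reviewer, String company, int maxTurns) {
        String feedback = "";
        String analysis = "";
        for (int turn = 1; turn <= maxTurns; turn++) {
            analysis = analyst.analyze(company, feedback);
            feedback = reviewer.review(analysis);
            if (feedback.isEmpty()) {
                return analysis; // Reviewer accepted the analysis
            }
        }
        return analysis; // safety cap reached: return the latest draft
    }

    public static void main(String[] args) {
        // Stub agents: the analyst adds metrics only after being asked for them.
        Analyst analyst = (company, fb) ->
                fb.isEmpty() ? company + ": overview" : company + ": overview + metrics";
        Reviewer reviewer = analysis ->
                analysis.contains("metrics") ? "" : "Analysis lacks performance metrics";
        System.out.println(run(analyst, reviewer, "TSLA", 5)); // prints "TSLA: overview + metrics"
    }
}
```

The turn cap is an addition worth considering in any loop whose exit condition is decided by a model, since it bounds cost if the Reviewer never reports completion.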

Possible Elements for Production-Readiness

To transform this prototype into a production-ready system, I’d consider:

- Scalability: Integrate a message queue (e.g., RabbitMQ) for asynchronous, distributed communication, handling multiple concurrent requests, as per the whitepaper’s production-scale vision with Vertex AI agents (p. 38).

- Explicit Reasoning: Add transparent reasoning outputs (e.g., “Thought: Analysis complete; Action: Finalize”) to the UI, enhancing visibility per the whitepaper’s ReAct framework (pp. 9-10).

- Proactive Adaptation: Enable the Reviewer to proactively identify gaps before receiving analyses, reducing iterations and aligning with dynamic adaptation (pp. 8-9).

- Robust Tool Handling: Enhance tools to handle edge cases (e.g., missing data, API failures) with retries, timeouts, or fallback data, ensuring reliability (pp. 12, 27-31).

- User Interaction: Add real-time UI streaming for user feedback, improving engagement and aligning with the whitepaper’s user-focused outcomes (p. 40).

- Performance Optimization: Use caching, batching, or advanced reasoning (e.g., Chain-of-Thought) to streamline iterations, ensuring real-time responsiveness (p. 12).
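To illustrate the robust tool handling item above, here is one possible shape for a retry wrapper with a fallback value, sketched in plain Java. The helper name `callWithRetry`, its parameters, and the linear backoff policy are assumptions chosen for brevity; a production system might prefer an established resilience library instead.

```java
import java.util.function.Supplier;

/** Hypothetical sketch: retries a failing tool call, then falls back. */
final class ToolRetrySketch {

    /** Calls the tool up to maxAttempts times; returns fallback if every attempt throws. */
    static <T> T callWithRetry(Supplier<T> tool, int maxAttempts, long backoffMillis, T fallback) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return tool.get();
            } catch (RuntimeException e) {
                if (attempt == maxAttempts) {
                    break; // no attempts left
                }
                try {
                    Thread.sleep(backoffMillis * attempt); // linear backoff between attempts
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt(); // preserve the interrupt flag
                    break;
                }
            }
        }
        return fallback;
    }
}
```

A fallback such as "No recent insider data available" keeps the agent’s output honest about missing data rather than letting a failed call turn into a fabricated answer.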

5. Conclusion

My experiment with a stock analysis AI agent prototype, inspired by Google’s “Agents” whitepaper, demonstrates the power of its cognitive architecture and orchestration layer. By implementing cyclical reasoning, tool usage, autonomy, and reliability, the prototype showcases how AI agents can collaboratively solve real-world problems like investment analysis. While it’s a promising proof-of-concept, scaling it for production requires addressing scalability, transparency, and robustness. I invite my network to provide feedback, collaborate on enhancements, and explore how these principles can drive AI innovation in finance and beyond. Together, we can build on Google’s vision to create scalable, reliable AI systems that transform industries.
