Introduction

It was another fun weekend of AI experiments, and this time I got my hands on LangChain4J. I was thrilled by how intuitive and powerful it turned out to be. Playing with it wasn’t just educational; it was a blast, and it showed how easily we can now integrate LLM capabilities into our Java projects. It also hinted at how much the library could change the way we approach AI development in Java.

LangChain4J is designed to simplify AI/LLM integration, and I’ll demonstrate this with a straightforward example. The library offers two levels of abstraction: low-level and high-level. The low-level abstraction in the langchain4j-core library provides the fundamental building blocks, including language models, prompt templates, and Retrieval-Augmented Generation (RAG) components such as embedding models and embedding stores. This level gives you fine-grained control over how your application is put together.

On the flip side, the high-level abstraction in the langchain4j library offers declarative APIs, such as AI Services (the AiServices class) and Chains, that make application development straightforward.
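To make the two levels concrete, here is a minimal sketch that uses the same OpenAI model both ways: directly through the low-level ChatLanguageModel interface, and through a declarative interface implemented by AiServices. It assumes the langchain4j and langchain4j-open-ai dependencies, an OPENAI_API_KEY environment variable, the gpt-3.5-turbo model name, and the 0.x API that was current when this post was written (later releases renamed some of these types and methods). The Assistant interface is hypothetical.

```java
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import dev.langchain4j.service.AiServices;

public class AbstractionLevels {

    // High-level: a plain Java interface (hypothetical name).
    // AiServices generates its implementation at runtime.
    public interface Assistant {
        String chat(String userMessage);
    }

    public static void main(String[] args) {
        // Low-level building block: a chat model backed by OpenAI.
        // Assumes OPENAI_API_KEY is set and the 0.x LangChain4J API.
        ChatLanguageModel model = OpenAiChatModel.builder()
                .apiKey(System.getenv("OPENAI_API_KEY"))
                .modelName("gpt-3.5-turbo")
                .build();

        // Low-level usage: send a prompt string straight to the model.
        String direct = model.generate("Say hello in five words or fewer.");
        System.out.println("Low-level:  " + direct);

        // High-level usage: the same model behind a declarative interface.
        Assistant assistant = AiServices.create(Assistant.class, model);
        System.out.println("High-level: " + assistant.chat("Say hello in five words or fewer."));
    }
}
```

The low-level call gives you full control over each request, while the high-level interface hides the plumbing and is the natural place to add features such as tools later on.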

See the LangChain4J documentation for more details.

Example

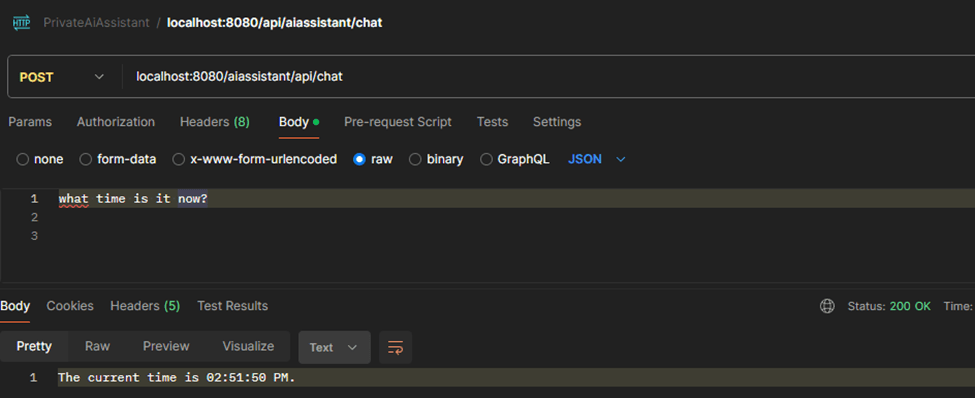

Let’s dive into how an AiService can enhance your AI/LLM application. I’ll use a simple use case: enabling an LLM-powered application, like the one I built on top of an OpenAI model, to report the current local time.

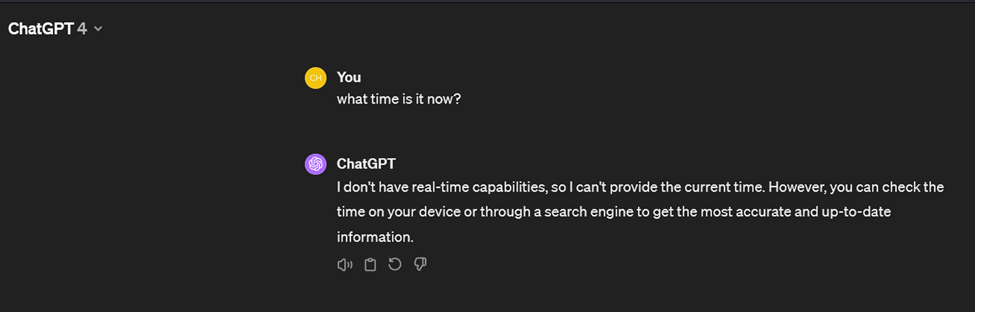

Usually, if you ask an LLM application like ChatGPT ‘What time is it?’, it can’t tell you, because the model has no access to real-time data.

The same is true for an LLM-powered application you build yourself.

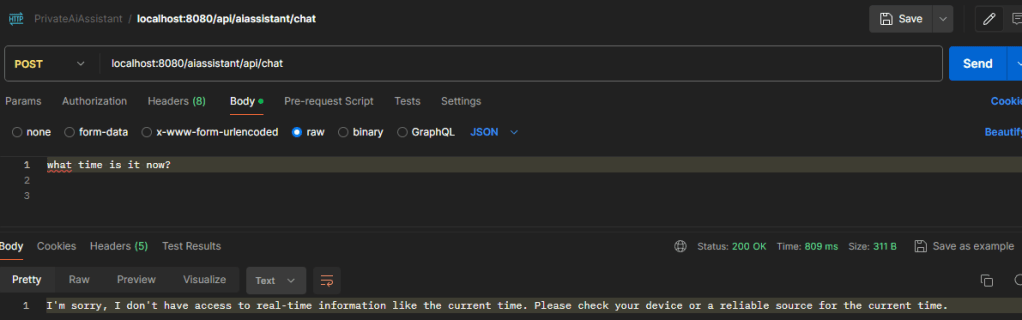

However, with the AiService object, we can overcome this limitation, as demonstrated below.

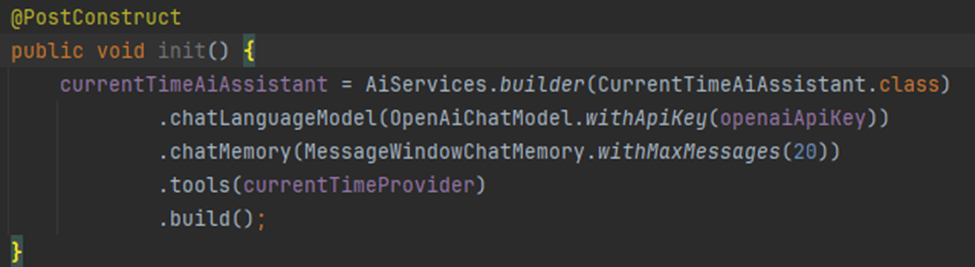

With an AiService in LangChain4J, we can equip the LLM application with a custom tool that fetches and returns the current local time of the machine where the application is running. This integration requires three key components:

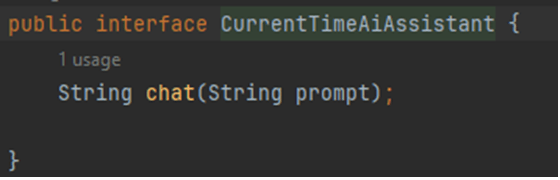

- An interface for the AiService to implement: the CurrentTimeAiAssistant interface

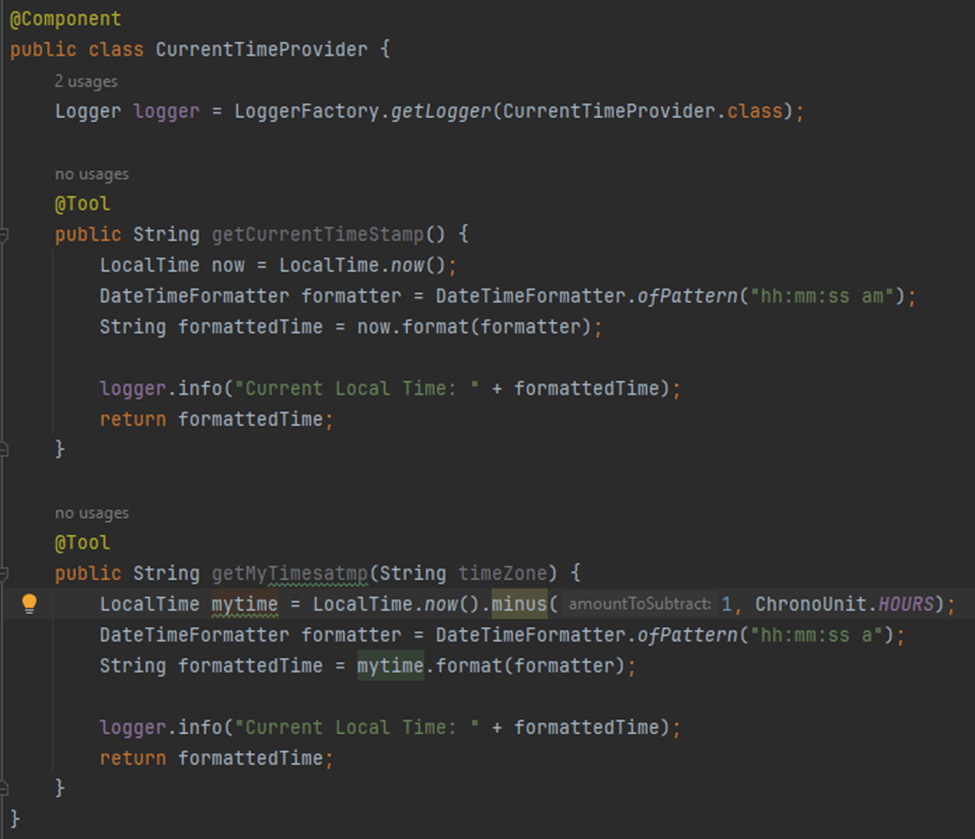

- An object with a method that fetches the current local time: the CurrentTimeProvider object

- Declarative code that defines the AiService and the tools it can use: the AiService is built from CurrentTimeAiAssistant and configured with CurrentTimeProvider as its tool.
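The three components can be sketched roughly as follows. This is an illustration, not the author’s code (that is in the screenshots and on GitHub). It assumes the langchain4j and langchain4j-open-ai dependencies and the 0.x AiServices builder, in which the model is passed with chatLanguageModel(). The chat() and getCurrentTimestamp() method names, the @Tool description, and the model name are hypothetical.

```java
import dev.langchain4j.agent.tool.Tool;
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import dev.langchain4j.service.AiServices;

import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class CurrentTimeExample {

    // 1. The AiService interface; LangChain4J generates the implementation.
    //    The chat() method name is an assumption.
    public interface CurrentTimeAiAssistant {
        String chat(String userMessage);
    }

    // 2. The tool object. The @Tool description is what the LLM reads
    //    when deciding whether to call this method.
    public static class CurrentTimeProvider {
        private static final DateTimeFormatter FORMAT =
                DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

        @Tool("Returns the current local date and time of the machine running this application")
        public String getCurrentTimestamp() {
            return LocalDateTime.now().format(FORMAT);
        }
    }

    // 3. Declarative wiring: the interface, the model, and the tools.
    public static CurrentTimeAiAssistant buildAssistant(ChatLanguageModel model) {
        return AiServices.builder(CurrentTimeAiAssistant.class)
                .chatLanguageModel(model)            // 0.x builder method name
                .tools(new CurrentTimeProvider())    // register the tool object
                .build();
    }

    public static void main(String[] args) {
        // Assumes OPENAI_API_KEY is set in the environment.
        ChatLanguageModel model = OpenAiChatModel.builder()
                .apiKey(System.getenv("OPENAI_API_KEY"))
                .modelName("gpt-3.5-turbo")
                .build();
        CurrentTimeAiAssistant assistant = buildAssistant(model);
        System.out.println(assistant.chat("What time is it?"));
    }
}
```

Because the tool reads the clock of the JVM it runs in, the answer reflects the local time wherever the application is deployed.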

Once this is set up, querying the application through the API below returns the current time accurately.

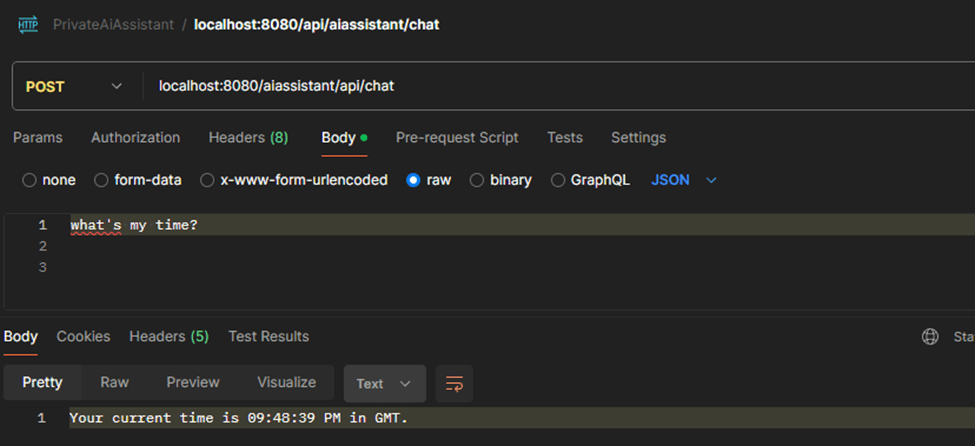

What’s truly remarkable is how the LLM application knows when to use the tool. Once the CurrentTimeProvider object is registered with the AiService through the tools() method, the method to invoke is determined automatically from the query. I didn’t have to map any specific questions to the local-time functionality in code. Under the hood, LangChain4J sends the model a description of each tool method (its name, the description from its @Tool annotation, and its parameters). The model decides whether a question needs a tool and requests the right one; LangChain4J then runs that method and passes the result back to the model, which composes the final answer. This makes development not only easier but also more dynamic.
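To see the information the model receives when choosing a tool, you can print the tool specifications that LangChain4J derives from an object’s @Tool methods. This is a minimal sketch assuming the langchain4j dependency and its ToolSpecifications helper; the TimeTools class, its method name, and its description are hypothetical.

```java
import dev.langchain4j.agent.tool.Tool;
import dev.langchain4j.agent.tool.ToolSpecification;
import dev.langchain4j.agent.tool.ToolSpecifications;

import java.time.LocalDateTime;
import java.util.ArrayList;
import java.util.List;

public class ToolSpecsDemo {

    // Hypothetical tool class with one annotated method.
    public static class TimeTools {
        @Tool("Returns the current local date and time")
        public String getCurrentTimestamp() {
            return LocalDateTime.now().toString();
        }
    }

    // Collects "name: description" for every @Tool method on the given object.
    // By default the tool name is the Java method name.
    public static List<String> describeTools(Object toolObject) {
        List<String> lines = new ArrayList<>();
        for (ToolSpecification spec : ToolSpecifications.toolSpecificationsFrom(toolObject)) {
            lines.add(spec.name() + ": " + spec.description());
        }
        return lines;
    }

    public static void main(String[] args) {
        describeTools(new TimeTools()).forEach(System.out::println);
    }
}
```

Since this name and description are essentially all the model knows about a tool, clear and specific @Tool text is what lets it pick the right method for a question.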

To illustrate, I added a getMyTimestamp() method to the CurrentTimeProvider object that returns the current time minus one hour. When I ask “What is my time?”, the model calls getMyTimestamp() and replies with the adjusted time.
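A provider with two tools along these lines might look like the sketch below. Only getMyTimestamp() and its one-hour offset come from the post. The Clock constructor parameter is an assumption added so the offset can be checked deterministically, and getCurrentTimestamp() and both @Tool descriptions are hypothetical. The class needs the langchain4j dependency for the @Tool annotation.

```java
import dev.langchain4j.agent.tool.Tool;

import java.time.Clock;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class CurrentTimeProvider {

    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Injected clock (an assumption, not from the post) so tests can pin the time.
    private final Clock clock;

    public CurrentTimeProvider(Clock clock) {
        this.clock = clock;
    }

    // Production default: the system clock in the JVM's default time zone.
    public CurrentTimeProvider() {
        this(Clock.systemDefaultZone());
    }

    @Tool("Returns the current local date and time where the application runs")
    public String getCurrentTimestamp() {
        return LocalDateTime.now(clock).format(FORMAT);
    }

    // A distinct description lets the model tell this tool apart from the one above.
    @Tool("Returns the user's own time, which is one hour behind the application's local time")
    public String getMyTimestamp() {
        return LocalDateTime.now(clock).minusHours(1).format(FORMAT);
    }
}
```

Registered with tools(new CurrentTimeProvider()), a question like “What is my time?” should match the second description more closely than the first, which is how the model ends up choosing getMyTimestamp().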

The complete code example is available on GitHub.

Conclusion

I could clearly see how LangChain4J empowers Java developers to build AI applications that once seemed complex or out of reach. Its simplicity and flexibility make it easy to give applications intelligent, real-time capabilities, such as letting an LLM call your own Java methods when a question needs them. As we continue to explore and push the boundaries of what’s possible, LangChain4J shows the power and potential of AI in the Java ecosystem.
